Since 2017, in what spare time I have (ha!), I've helped my colleague Eric Berger host his Houston-area weather forecasting site, Space City Weather. It’s an interesting hosting challenge: on a typical day, SCW does maybe 20,000–30,000 page views to 10,000–15,000 unique visitors, which is a relatively easy load to handle with minimal work. But when severe weather events happen (especially in the summer, when hurricanes lurk in the Gulf of Mexico), the site’s traffic can spike to more than a million page views in 12 hours. That level of traffic requires a bit more prep to handle.
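To put those numbers side by side, here is a quick back-of-the-envelope sketch that turns the quoted page-view figures into average per-second rates. It assumes traffic is spread evenly across each window, which real traffic never is, and it counts page views rather than individual HTTP requests, so treat the results as a floor rather than a measured load. The function name `average_rate` is just an illustrative helper.

```python
# Back-of-the-envelope comparison of the traffic figures quoted above.
# Assumption: traffic is spread evenly over each window (real peaks are higher).
SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_12_HOURS = 12 * 60 * 60


def average_rate(page_views: int, window_seconds: int) -> float:
    """Return the average number of page views per second over the window."""
    return page_views / window_seconds


typical_low = average_rate(20_000, SECONDS_PER_DAY)
typical_high = average_rate(30_000, SECONDS_PER_DAY)
storm = average_rate(1_000_000, SECONDS_PER_12_HOURS)

print(f"typical day: {typical_low:.2f} to {typical_high:.2f} page views/sec")
print(f"storm spike: {storm:.1f} page views/sec")
print(f"spike vs. busy typical day: {storm / typical_high:.0f}x")
```

Even as a smoothed average, the storm figure works out to roughly 23 page views per second, about 67 times the rate of a busy ordinary day, and each page view fans out into many more requests for images, scripts, and stylesheets.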

For a very long time, I ran SCW on a backend stack made up of HAProxy for SSL termination, Varnish Cache for on-box caching, and Nginx for the actual web server application—all fronted by Cloudflare to absorb the majority of the load. (I wrote about this setup at length on Ars a few years ago for folks who want some more in-depth details.) This stack was fully battle-tested and ready to devour whatever traffic we threw at it, but it was also annoyingly complex, with multiple cache layers to contend with, and that complexity made troubleshooting issues more difficult than I would have liked.

So during some winter downtime two years ago, I took the opportunity to jettison some complexity and reduce the hosting stack down to a single monolithic web server application: OpenLiteSpeed.

Out with the old, in with the new

I didn’t know too much about OpenLiteSpeed (“OLS” to its friends) other than that it’s mentioned a bunch in discussions about WordPress hosting, and since SCW runs WordPress, I started to get interested. OLS seemed to get a lot of praise for its integrated caching, especially when WordPress was involved; it was purported to be quite quick compared to Nginx; and, frankly, after five-ish years of admining the same stack, I was interested in changing things up. OpenLiteSpeed it was!

The first significant adjustment to deal with was that OLS is primarily configured through an actual GUI, with all the annoying potential issues that brings with it (another port to secure, another password to manage, another public point of entry into the backend, more PHP resources dedicated just to the admin interface). But the GUI was fast, and it mostly exposed the settings that needed exposing. Translating the existing Nginx WordPress configuration into OLS-speak was a good acclimation exercise, and I eventually settled on Cloudflare tunnels as an acceptable method for keeping the admin console hidden away and notionally secure.

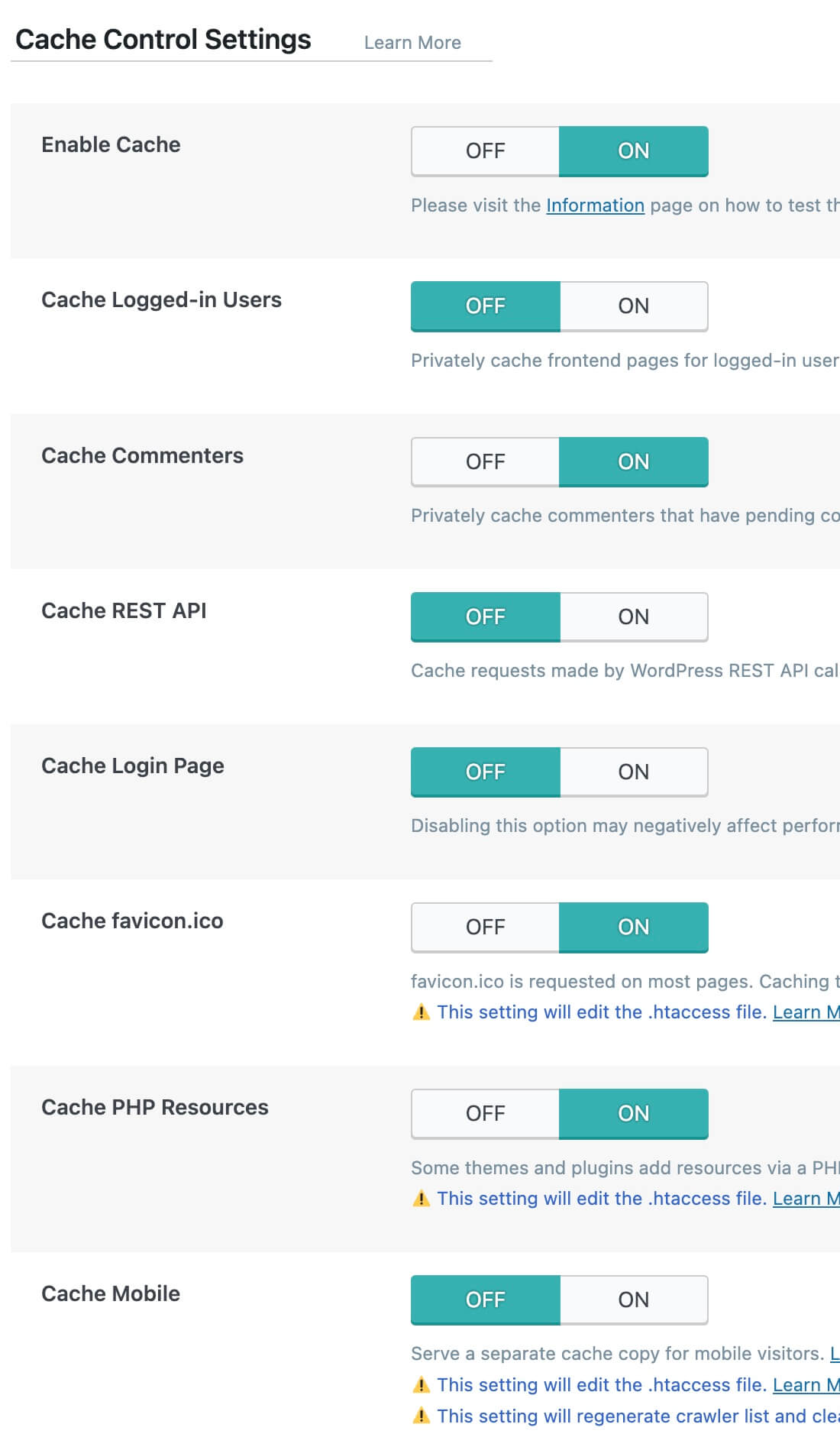

The other major adjustment was the OLS LiteSpeed Cache plugin for WordPress, which is the primary tool one uses to configure how WordPress itself interacts with OLS and its built-in cache. It’s a massive plugin with pages and pages of configurable options, many of which are concerned with driving utilization of the Quic.Cloud CDN service (which is operated by LiteSpeed Technologies, the company that created OpenLiteSpeed and its for-pay sibling, LiteSpeed).

Getting the most out of WordPress on OLS meant spending some time in the plugin, figuring out which of the options would help and which would hurt. (Perhaps unsurprisingly, there are plenty of ways in there to get oneself into stupid amounts of trouble by being too aggressive with caching.) Fortunately, Space City Weather provides a great testing ground for web servers, being a nicely active site with a very cache-friendly workload, and so I hammered out a starting configuration with which I was reasonably happy and, while speaking the ancient holy words of ritual, flipped the cutover switch. HAProxy, Varnish, and Nginx went silent, and OLS took up the load.

Source: "I abandoned OpenLiteSpeed and went back to good ol' Nginx," Ars Technica, https://ift.tt/SAVP3m6
