Google just changed the privacy policy of its apps to let you know that it will use all of your public data and everything else on the internet to train its ChatGPT rivals. There’s no way to oppose Google’s change other than to delete your account. Even then, anything you’ve ever posted online might be used to train Google’s Bard and other ChatGPT alternatives.

Google’s privacy policy change should be a stark reminder not to overshare with any AI chatbots. Below, I’ll give a few examples of the information you should keep from AI until those programs can be trusted with your privacy, if that ever comes to pass.

We’re currently in the wild west of generative AI innovation when it comes to regulation. But in due time, governments around the world will institute best practices for generative AI programs to safeguard user privacy and protect copyrighted content.

There will also come a day when generative AI works on-device without reporting back to the mothership. Humane’s Ai Pin could be one such product. Apple’s Vision Pro might be another, assuming Apple has its own generative AI product on the spatial computer.

Until then, treat ChatGPT, Google Bard, and Bing Chat like strangers in your home or office. You wouldn’t share personal information or work secrets with a stranger.

I’ve told you before that you should not share personal details with ChatGPT, but I’ll expand below on the kinds of sensitive information that generative AI companies shouldn’t get from you.

Personal information that can identify you

Try your best to avoid sharing personal information that can identify you, like your full name, address, birthday, and social security number, with ChatGPT and other bots.

Remember that OpenAI implemented a privacy setting months after releasing ChatGPT. When enabled, that setting lets you prevent your prompts from being used to train ChatGPT. But that’s still insufficient to ensure your confidential information stays private once you share it with the chatbot. You might disable that setting, or a bug might impact its effectiveness.

The problem here isn’t that ChatGPT will profit from that information or that OpenAI will do something nefarious with it. But it will be used to train the AI.

More importantly, hackers attacked OpenAI, and the company suffered a data breach in early May. That’s the kind of incident that might lead to your data reaching the wrong people.

Sure, it might be hard for anyone to find that particular information, but it’s not impossible. And they can use that data for nefarious purposes, like stealing your identity.
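If you want a safety net for the advice above, you can scrub obvious identifiers from a prompt before pasting it into a chatbot. What follows is only a minimal sketch in Python. The `redact` function and the three patterns are hypothetical and made up for illustration: they assume US-style social security and phone numbers, and they will miss names, addresses, birthdays, and plenty of other formats.

```python
import re

# Hypothetical patterns for illustration only; real identifiers come in
# many more formats than these three.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),          # US format, e.g. 123-45-6789
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),  # US format, no country code
}


def redact(prompt: str) -> str:
    """Replace anything matching a known pattern with a [LABEL] placeholder."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


if __name__ == "__main__":
    print(redact("I'm jane.doe@example.com, SSN 123-45-6789, call 555-867-5309."))
```

A filter like this only catches what it has a pattern for, so treat it as a backstop rather than a replacement for reading your prompt before you hit send.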

Usernames and passwords

What hackers want most from data breaches is login information. Usernames and passwords can open unexpected doors, especially if you recycle the same credentials for multiple apps and services. On that note, I’ll remind you again to use apps like Proton Pass and 1Password that will help you manage all your passwords securely.

While I dream about telling an operating system to log me into an app, which will probably be possible with private, on-device ChatGPT versions, absolutely do not share your logins with generative AI. There’s no point in doing it.

Financial information

There’s no reason to give ChatGPT personal banking information either. OpenAI will never need credit card numbers or bank account details. And ChatGPT can’t do anything with it. Like the previous categories, this is a highly sensitive type of data. In the wrong hands, it can damage your finances significantly.

On that note, if any app claiming to be a ChatGPT client for a mobile device or computer asks you for financial information, that might be a red flag that you’re dealing with ChatGPT malware. Under no circumstances should you provide that data. Instead, delete the app, and get only official generative AI apps from OpenAI, Google, or Microsoft.

Work secrets

In the early days of ChatGPT, some Samsung employees uploaded code to the chatbot. That was confidential information that reached OpenAI’s servers. This prompted Samsung to enforce a ban on generative AI bots. Other companies followed, including Apple. And yes, Apple is working on its own ChatGPT-like products.

Despite looking to scrape the internet to train its ChatGPT rivals, Google is also restricting generative AI use at work.

This should be enough to tell you that you should keep your work secrets secret. And if you need ChatGPT’s help, you should find more creative ways to get it than spilling work secrets.

Health information

I’m leaving this one for last, not because it’s unimportant, but because it’s complicated. I’d advise against sharing health data in great detail with chatbots.

You might want to give these bots prompts containing “what if” scenarios of a person exhibiting certain symptoms. I’m not saying to use ChatGPT to self-diagnose your illnesses now. Or to research others. We’ll reach a point in time when generative AI will be able to do that. Even then, you should not give ChatGPT-like services all your health data. Not unless they’re personal, on-device, AI products.

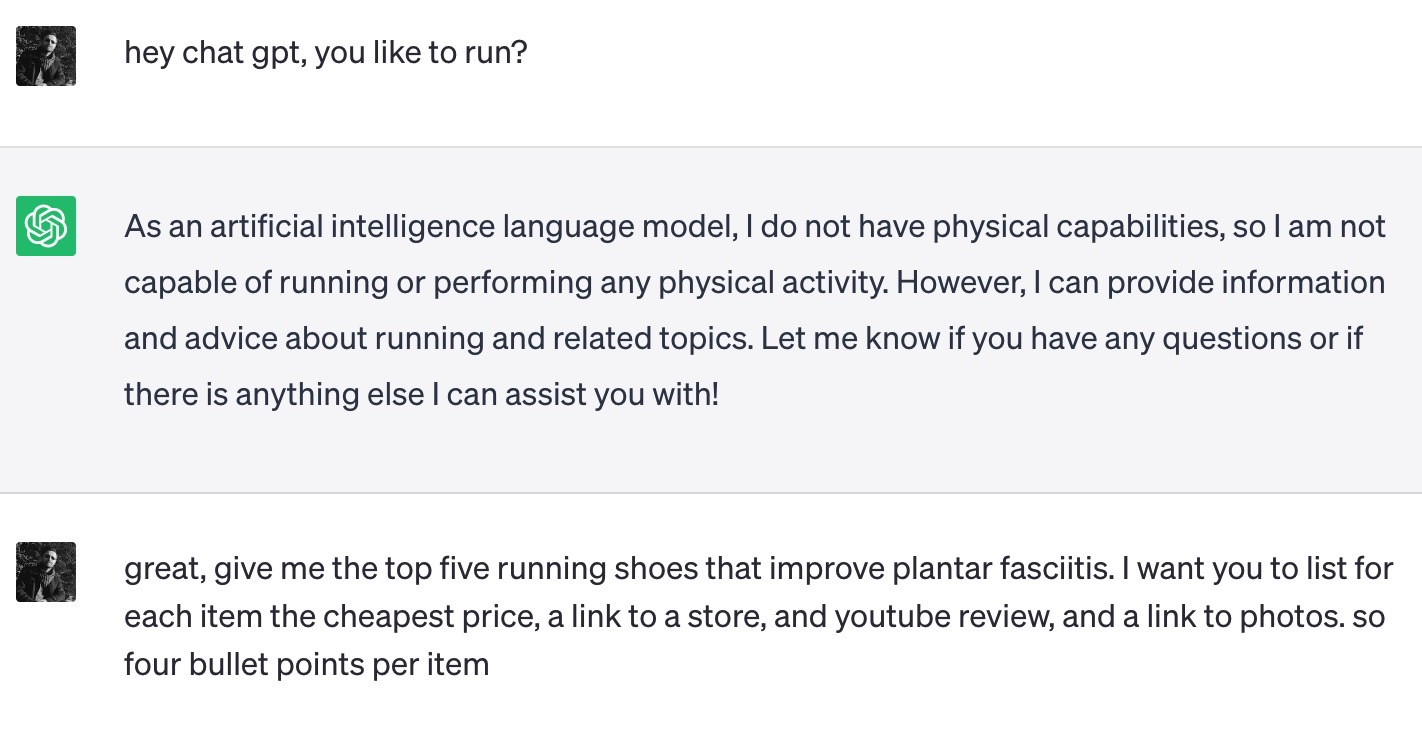

For example, I used ChatGPT to find running shoes that would address certain medical conditions without oversharing health details about me.

Also, there’s another category of health data here: your most personal thoughts. Some people might rely on chatbots for therapy instead of actual mental health professionals. It’s not for me to say whether that’s the right thing to do. But I will repeat the overall point I’m making here. ChatGPT and other chatbots do not provide privacy that you can trust.

Your personal thoughts will reach the servers of OpenAI, Google, and Microsoft. And they’ll be used to train the bots.

While we might reach a point in time when generative AI products can also act as personal psychologists, we’re not there yet. If you must talk to generative AI to feel better, you should be wary of what information you share with the bots.

ChatGPT isn’t all-knowing

I’ve covered before the kind of information ChatGPT can’t help you with. And the prompts it refuses to answer. I said back then that the data programs like ChatGPT provide isn’t always accurate.

I’ll also remind you that ChatGPT and other chatbots can give you the wrong information. Even regarding health matters, whether it’s mental health or other illnesses. So you should always ask for sources for the replies to your prompts. But never be tempted to provide more personal information to the bots in the hope of getting answers that are better tailored to your needs.

Finally, there’s the risk of providing personal data to malware apps posing as generative AI programs. If that happens, you might not know what you did until it’s too late. By then, hackers might already be using that personal information against you.

Article From & Read More ( 5 things you should never share with ChatGPT - BGR )https://ift.tt/WwmbFPx
