AI startup Stability AI continues to refine its generative AI models in the face of increasing competition — and ethical challenges.

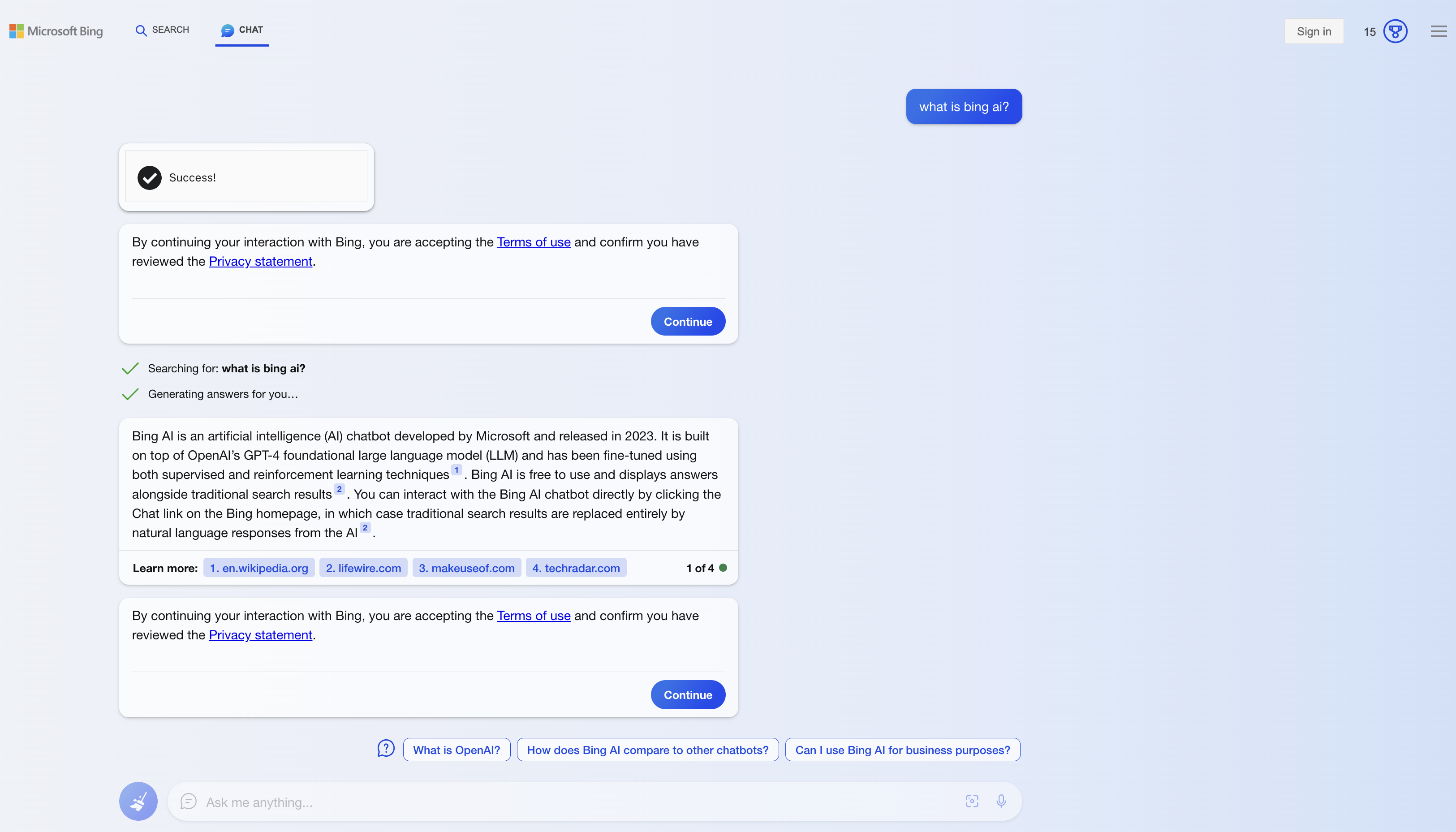

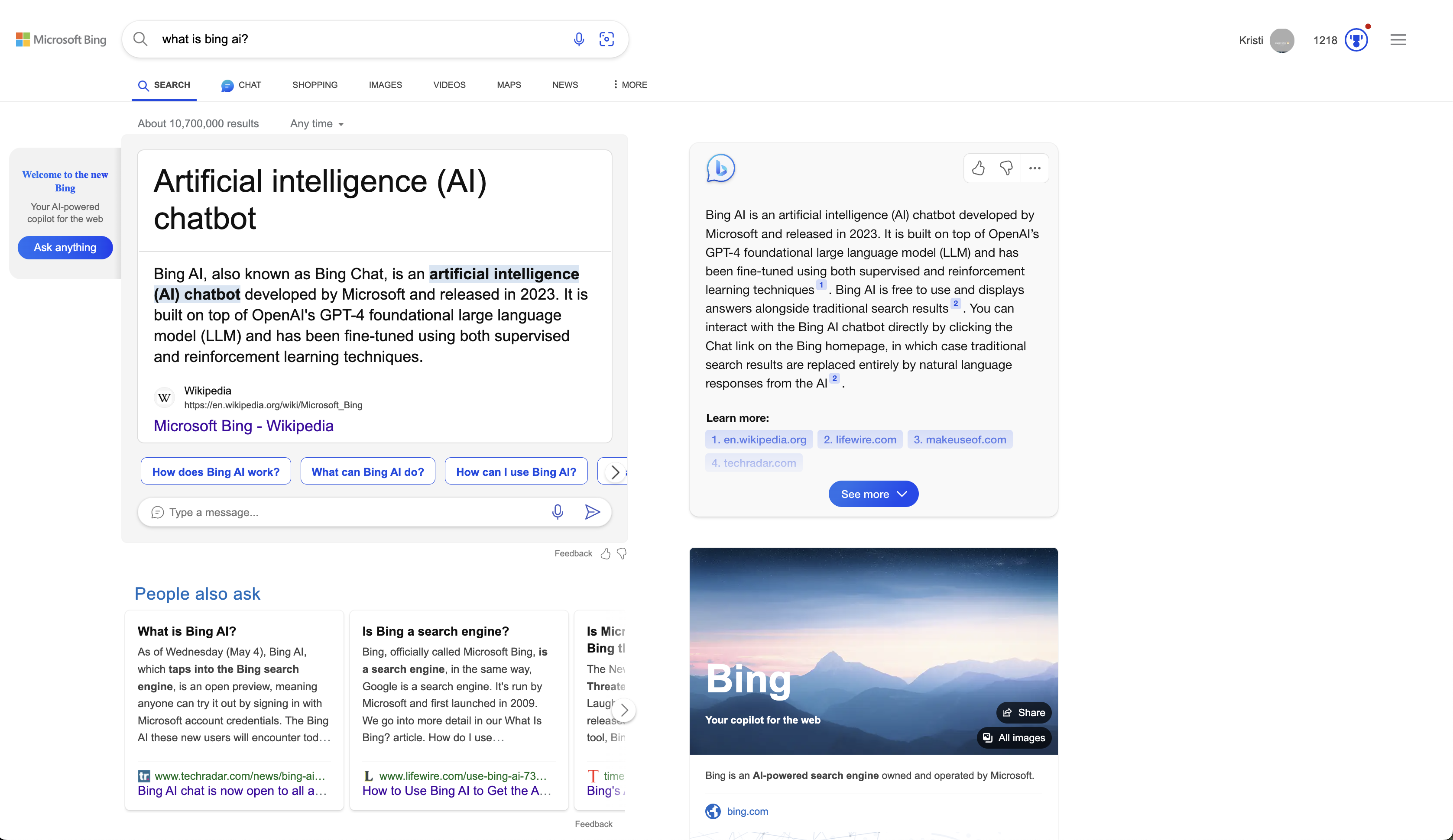

Today, Stability AI announced the launch of Stable Diffusion XL 1.0, a text-to-image model that the company describes as its "most advanced" release to date. Available as open source on GitHub, as well as through Stability's API and its consumer apps Clipdrop and DreamStudio, Stable Diffusion XL 1.0 delivers "more vibrant" and "accurate" colors and better contrast, shadows and lighting compared to its predecessor, Stability claims.

In an interview with TechCrunch, Joe Penna, Stability AI’s head of applied machine learning, noted that Stable Diffusion XL 1.0, which contains 3.5 billion parameters, can yield full 1-megapixel resolution images “in seconds” in multiple aspect ratios. “Parameters” are the parts of a model learned from training data and essentially define the skill of the model on a problem, in this case generating images.
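For a rough sense of what "1-megapixel images in multiple aspect ratios" means in practice, here is a small Python sketch that computes width and height pairs landing near one million pixels for a few common ratios. It is illustrative only. It assumes "1 megapixel" means 1024 × 1024 pixels and that each side is rounded to a multiple of 64; both are assumptions made for this example, not figures Stability has published.

```python
import math

# Assumption for illustration: "1 megapixel" taken as 1024 x 1024 = 1,048,576 pixels.
TARGET_PIXELS = 1024 * 1024
# Assumption for illustration: each side snapped to a multiple of 64 pixels.
MULTIPLE = 64


def dims_for_aspect(ratio_w: int, ratio_h: int,
                    target: int = TARGET_PIXELS,
                    multiple: int = MULTIPLE) -> tuple[int, int]:
    """Return (width, height) close to `target` pixels at roughly ratio_w:ratio_h."""
    # Solve width * height = target subject to width / height = ratio_w / ratio_h.
    height = math.sqrt(target * ratio_h / ratio_w)
    width = height * ratio_w / ratio_h

    def snap(x: float) -> int:
        # Round to the nearest multiple, never going below one multiple.
        return max(multiple, int(round(x / multiple)) * multiple)

    return snap(width), snap(height)


if __name__ == "__main__":
    for rw, rh in [(1, 1), (16, 9), (4, 3), (9, 16)]:
        w, h = dims_for_aspect(rw, rh)
        print(f"{rw}:{rh} -> {w}x{h} ({w * h / 1e6:.2f} MP)")
```

Under those assumptions, a square image comes out at exactly 1024 × 1024, while a 16:9 image lands at 1344 × 768, slightly under the 1,048,576-pixel target because of the rounding.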

The previous-gen Stable Diffusion model, Stable Diffusion XL 0.9, could produce higher-resolution images as well, but required more computational might.

“Stable Diffusion XL 1.0 is customizable, ready for fine-tuning for concepts and styles,” Penna said. “It’s also easier to use, capable of complex designs with basic natural language processing prompting.”

Stable Diffusion XL 1.0 also improves on text generation. While many of the best text-to-image models struggle to generate images with legible logos, much less calligraphy or fonts, Stable Diffusion XL 1.0 is capable of "advanced" text generation and legibility, Penna says.

And, as reported by SiliconAngle and VentureBeat, Stable Diffusion XL 1.0 supports inpainting (reconstructing missing parts of an image), outpainting (extending existing images) and “image-to-image” prompts — meaning users can input an image and add some text prompts to create more detailed variations of that picture. Moreover, the model understands complicated, multi-part instructions given in short prompts, whereas previous Stable Diffusion models needed longer text prompts.

An image generated by Stable Diffusion XL 1.0.

"We hope that by releasing this much more powerful open source model, the resolution of the images will not be the only thing that quadruples, but also advancements that will greatly benefit all users," Penna added.

But as with previous versions of Stable Diffusion, the model raises sticky moral issues.

The open source version of Stable Diffusion XL 1.0 can, in theory, be used by bad actors to generate toxic or harmful content, like nonconsensual deepfakes. That’s partially a reflection of the data that was used to train it: millions of images from around the web.

Countless tutorials demonstrate how to use Stability AI’s own tools, including DreamStudio, an open source frontend for Stable Diffusion, to create deepfakes. Countless others show how to fine-tune the base Stable Diffusion models to generate porn.

Penna doesn't deny that abuse is possible, and he acknowledges that the model contains certain biases as well. But he added that Stability AI has taken "extra steps" to mitigate harmful content generation by filtering the model's training data for "unsafe" imagery, releasing new warnings related to problematic prompts and blocking as many individual problematic terms in the tool as possible.

Stable Diffusion XL 1.0’s training set also includes artwork from artists who’ve protested against companies including Stability AI using their work as training data for generative AI models. Stability AI claims that it’s shielded from legal liability by fair use doctrine, at least in the U.S. But that hasn’t stopped several artists and stock photo company Getty Images from filing lawsuits to stop the practice.

Stability AI, which has a partnership with startup Spawning to respect “opt-out” requests from these artists, says that it hasn’t removed all flagged artwork from its training data sets but that it “continues to incorporate artists’ requests.”

“We are constantly improving the safety functionality of Stable Diffusion and are serious about continuing to iterate on these measures,” Penna said. “Moreover, we are committed to respecting artists’ requests to be removed from training data sets.”

To coincide with the release of Stable Diffusion XL 1.0, Stability AI is releasing a fine-tuning feature in beta for its API that will allow users to use as few as five images to "specialize" generation on specific people, products and more. The company is also bringing Stable Diffusion XL 1.0 to Bedrock, Amazon's cloud platform for hosting generative AI models, expanding on its previously announced collaboration with AWS.

The push for partnerships and new capabilities comes as Stability suffers a lull in its commercial endeavors — facing stiff competition from OpenAI, Midjourney and others. In April, Semafor reported that Stability AI, which has raised over $100 million in venture capital to date, was burning through cash — spurring the closing of a $25 million convertible note in June and an executive hunt to help ramp up sales.

“The latest SDXL model represents the next step in Stability AI’s innovation heritage and ability to bring the most cutting-edge open access models to market for the AI community,” Stability AI CEO Emad Mostaque said in a press release. “Unveiling 1.0 on Amazon Bedrock demonstrates our strong commitment to work alongside AWS to provide the best solutions for developers and our clients.”


Source: Stability AI releases its latest image-generating model, Stable Diffusion XL 1.0 (TechCrunch)


